Things I have been working on

Projects

Data Specialist for Production Plant

- Centralized data from multiple sources into various databases using ETL (Alteryx) and SQL.
- Designed and implemented data pipelines to automate the extraction, transformation, and loading (ETL) processes.
- Utilized Alteryx for data preparation, blending, and analytics, ensuring data integrity and consistency.
- Employed SQL queries for efficient data extraction, joining, and aggregation across different databases.
- Developed dashboards and reports using Power BI to visualize production metrics and key performance indicators (KPIs).
- Integrated data from SAP, MES (Manufacturing Execution Systems), and other operational databases into a centralized data warehouse.
- Conducted data quality checks and validation to ensure accurate and reliable data for analysis.
- Streamlined data workflows and reduced manual data handling, saving up to 1,000 person-hours.
- Provided data-driven insights to optimize production processes, enhance efficiency, and support decision-making.
- Collaborated with cross-functional teams, including IT, operations, and finance, to align data strategies with business goals.
- Created detailed Power BI dashboards that included interactive visualizations, allowing stakeholders to explore data and derive insights dynamically.
- Utilized advanced Power BI features such as DAX (Data Analysis Expressions) and Power Query for complex data transformations and calculations.
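
The SQL joining, aggregation, and data quality checks listed above can be sketched roughly as follows. This is only an illustration: the table names (`mes_output`, `sap_lines`), the columns, and the sample rows are hypothetical, and an in-memory SQLite database stands in for the actual plant databases.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical source tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE mes_output (line_id TEXT, shift TEXT, units INTEGER);
        CREATE TABLE sap_lines (line_id TEXT PRIMARY KEY, plant TEXT);
        INSERT INTO mes_output VALUES
            ('A1', 'day', 120), ('A1', 'night', 95),
            ('A2', 'day', 80),  ('A2', 'night', NULL);
        INSERT INTO sap_lines VALUES ('A1', 'Plant A'), ('A2', 'Plant A');
        """
    )
    return conn


def null_unit_rows(conn: sqlite3.Connection) -> int:
    """Data quality check: count rows whose unit count is missing."""
    query = "SELECT COUNT(*) FROM mes_output WHERE units IS NULL"
    return conn.execute(query).fetchone()[0]


def units_per_line(conn: sqlite3.Connection) -> list:
    """Join the two sources and aggregate produced units per line."""
    query = """
        SELECT s.plant, m.line_id, SUM(m.units) AS total_units
        FROM mes_output AS m
        JOIN sap_lines AS s ON s.line_id = m.line_id
        WHERE m.units IS NOT NULL
        GROUP BY s.plant, m.line_id
        ORDER BY m.line_id
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    print("rows with missing units:", null_unit_rows(conn))
    for row in units_per_line(conn):
        print(row)
```

Running the quality check before the aggregation makes it visible how many rows the `WHERE m.units IS NOT NULL` filter silently drops.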

Vehicle Detection Using IBM Clusters:

- Led a team of data scientists to set up a multi-GPU environment on IBM's cluster.
- Configured and optimized NVIDIA GPUs for parallel processing to accelerate deep learning model training.
- Developed and implemented convolutional neural networks (CNNs) using PyTorch and TensorFlow for vehicle detection from drone images.
- Applied transfer learning techniques using pre-trained models such as YOLOv5 and Detectron2 to enhance detection accuracy and reduce training time.
- Preprocessed and augmented large datasets of aerial images to improve model robustness and performance.
- Utilized IBM Watson for additional cognitive computing capabilities and integration with cloud services.

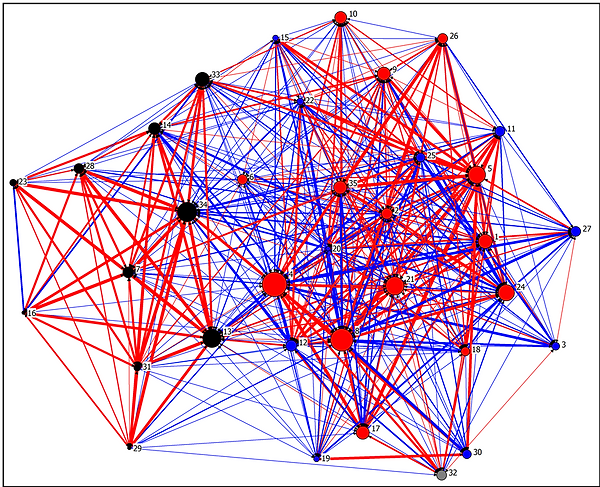

Staff Mobility Tool

- Created a comprehensive tool to optimize staff mobility in urban areas using advanced algorithms and graph theory.
- Developed bio-inspired algorithms, such as ant colony optimization, to find optimal routes and schedules for staff transportation.
- Implemented the solution in Python, leveraging libraries such as NetworkX for graph-based analysis and Scikit-Learn for machine learning tasks.
- Integrated Google Maps API for real-time geolocation data, route planning, and traffic updates to enhance the tool's accuracy and efficiency.
- Reduced transportation costs by optimizing travel routes and schedules, resulting in significant savings for the company.
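
To illustrate the ant colony optimization idea mentioned above, here is a minimal sketch that searches for a short round trip over a small distance matrix. It is not the tool's actual implementation: it uses plain Python rather than NetworkX, and the function names, parameter defaults (`n_ants`, `alpha`, `beta`, `evaporation`), and the four-stop example are all assumptions made for illustration.

```python
import math
import random


def tour_length(tour, dist):
    """Total length of a closed tour that returns to its starting stop."""
    n = len(tour)
    return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))


def ant_colony_tour(dist, n_ants=10, n_iters=50, alpha=1.0, beta=2.0,
                    evaporation=0.5, seed=0):
    """Search for a short closed tour using a basic ant colony scheme."""
    rng = random.Random(seed)
    n = len(dist)
    pheromone = [[1.0] * n for _ in range(n)]
    best_tour, best_len = None, float("inf")

    for _ in range(n_iters):
        tours = []
        for _ in range(n_ants):
            # Each ant builds a tour, one stop at a time.
            tour = [rng.randrange(n)]
            unvisited = set(range(n)) - {tour[0]}
            while unvisited:
                current = tour[-1]
                candidates = sorted(unvisited)
                # Favor edges with more pheromone and shorter distance.
                weights = [
                    (pheromone[current][j] ** alpha)
                    * ((1.0 / dist[current][j]) ** beta)
                    for j in candidates
                ]
                nxt = rng.choices(candidates, weights=weights, k=1)[0]
                tour.append(nxt)
                unvisited.remove(nxt)
            length = tour_length(tour, dist)
            tours.append((tour, length))
            if length < best_len:
                best_tour, best_len = tour, length

        # Evaporate old pheromone, then deposit new pheromone on used edges.
        for i in range(n):
            for j in range(n):
                pheromone[i][j] *= 1.0 - evaporation
        for tour, length in tours:
            for i in range(n):
                a, b = tour[i], tour[(i + 1) % n]
                pheromone[a][b] += 1.0 / length
                pheromone[b][a] += 1.0 / length

    return best_tour, best_len


if __name__ == "__main__":
    # Hypothetical example: four stops on the corners of a unit square.
    points = [(0, 0), (0, 1), (1, 1), (1, 0)]
    dist = [[math.dist(p, q) for q in points] for p in points]
    print(ant_colony_tour(dist))
```

In a real routing setting the distance matrix would come from travel times rather than straight-line distances, and the tour constraint would be replaced by whatever schedule constraints apply.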

E-commerce Automation:

- Developed and deployed automation scripts to manage and update the affiliate customer database, enhancing efficiency and reducing manual workload.
- Utilized Apache Airflow to create DAGs (Directed Acyclic Graphs) for orchestrating complex data workflows, ensuring smooth and reliable execution of automated tasks.
- Established secure FTPS connections for data transfer, ensuring the confidentiality and integrity of sensitive information.
- Extracted and processed data from internal APIs, leveraging RESTful services to gather and update information dynamically.
- Collaborated closely with backend development teams to integrate automation scripts seamlessly into the existing e-commerce platform.
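
The core idea behind DAG-based orchestration is that each task runs only after the tasks it depends on. The sketch below shows that ordering with the standard library's `graphlib.TopologicalSorter`; it is not Airflow code, and the task names (`fetch_api`, `update_db`, `upload_ftps`) are hypothetical stand-ins for the steps described above.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run each task after its dependencies and return the execution order.

    tasks:        mapping of task name -> zero-argument callable
    dependencies: mapping of task name -> set of task names it depends on
    """
    # static_order() raises graphlib.CycleError if the graph is not acyclic.
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order


if __name__ == "__main__":
    log = []
    # Hypothetical tasks; real ones would call the API, database, and FTPS server.
    tasks = {
        "fetch_api": lambda: log.append("fetched"),
        "update_db": lambda: log.append("updated"),
        "upload_ftps": lambda: log.append("uploaded"),
    }
    dependencies = {
        "update_db": {"fetch_api"},
        "upload_ftps": {"update_db"},
    }
    print(run_pipeline(tasks, dependencies))
    print(log)
```

A scheduler such as Airflow adds retries, scheduling, and monitoring on top of this ordering, which the sketch leaves out.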

Instagram Automation Project:

- Developed an automated system for managing an Instagram account, utilizing advanced AI models to generate and curate content.
- Leveraged Hugging Face models for sentiment analysis, text generation, and image generation, ensuring relevant and engaging captions for posts.

Video Animations Using Deforum and Stable Diffusion:

- Created innovative video animations utilizing Deforum and Stable Diffusion for generating frame-by-frame image sequences.
- Used Stable Diffusion models to produce high-quality, AI-generated images based on specific prompts and themes.
- Integrated Deforum’s animation capabilities to interpolate and transition between frames smoothly, creating fluid and dynamic videos.
- Fine-tuned open-source models for specific styles and subjects, enhancing the artistic quality and relevance of the animations.

Stock Market Forecasting:

- Developed a Jupyter notebook for forecasting stock market trends using machine learning techniques.
- Leveraged TensorFlow to build and train predictive models based on historical stock data, focusing on time-series analysis.
- Utilized LSTM (Long Short-Term Memory) networks to capture the temporal dependencies in stock prices, improving the accuracy of forecasts.
- Integrated financial datasets from sources like Yahoo Finance and Alpha Vantage, ensuring comprehensive and up-to-date information for model training.
- Visualized forecast results using Matplotlib and Seaborn, providing clear and interpretable charts for stakeholders.
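
Before an LSTM can learn temporal dependencies, a price series is usually cut into fixed-length input windows, each paired with the value that follows it. The sketch below shows only that windowing step in plain Python; the function name `make_windows` and the sample prices are illustrative assumptions, and the notebook's actual preprocessing may differ.

```python
def make_windows(series, window):
    """Split a series into (inputs, targets) pairs for next-step forecasting.

    Each input is `window` consecutive values; its target is the next value.
    """
    if window <= 0 or window >= len(series):
        raise ValueError("window must be between 1 and len(series) - 1")
    inputs, targets = [], []
    for start in range(len(series) - window):
        inputs.append(series[start:start + window])
        targets.append(series[start + window])
    return inputs, targets


if __name__ == "__main__":
    # Hypothetical closing prices.
    prices = [101.0, 102.5, 101.8, 103.2, 104.0]
    x, y = make_windows(prices, window=3)
    for features, target in zip(x, y):
        print(features, "->", target)
```

Keeping the windows in chronological order also makes it straightforward to split training and validation data by time rather than at random.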

Stock Market Trading Script:

- Developed an automated trading script using the Alpaca API, AWS EC2, and Python to execute trades based on predefined strategies.
- Implemented technical analysis strategies, including moving average crossovers, Bollinger Bands, and momentum indicators, to identify trading signals.
- Utilized Alpaca’s commission-free trading API for real-time market data access and order execution.
- Deployed the trading script on AWS EC2 to ensure high availability and performance, allowing for 24/7 operation.
- Utilized Pandas and NumPy for data manipulation and analysis, ensuring efficient processing of large financial datasets.
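
As an illustration of the moving average crossover strategy mentioned above, the sketch below flags a "buy" when a short moving average rises above a long one and a "sell" when it falls below. It is a simplified example, not the deployed script: it uses plain Python instead of Pandas, makes no Alpaca API calls, and the window sizes and sample prices are assumptions chosen to keep the example small.

```python
def simple_moving_average(prices, window):
    """Moving average aligned with prices; None until enough data exists."""
    averages = []
    for i in range(len(prices)):
        if i + 1 < window:
            averages.append(None)
        else:
            averages.append(sum(prices[i + 1 - window:i + 1]) / window)
    return averages


def crossover_signals(prices, short_window=2, long_window=3):
    """Return (index, 'buy' | 'sell') pairs where the averages cross."""
    if short_window >= long_window:
        raise ValueError("short_window must be smaller than long_window")
    short = simple_moving_average(prices, short_window)
    long_ = simple_moving_average(prices, long_window)
    signals = []
    for i in range(1, len(prices)):
        if long_[i - 1] is None:
            continue  # Not enough history for the long average yet.
        prev_diff = short[i - 1] - long_[i - 1]
        curr_diff = short[i] - long_[i]
        if prev_diff <= 0 < curr_diff:
            signals.append((i, "buy"))   # Short average crossed above long.
        elif prev_diff >= 0 > curr_diff:
            signals.append((i, "sell"))  # Short average crossed below long.
    return signals


if __name__ == "__main__":
    # Hypothetical price series.
    prices = [10, 9, 8, 9, 11, 12, 10, 8, 7]
    print(crossover_signals(prices))
```

In a live script the signals would be turned into orders through the broker API, with position sizing and risk checks that this sketch omits.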